The HPC is changing

We will soon be switching to a new High Performance Cluster, called Double Helix. This will mean that some of the commands you use to connect to the HPC and call modules will change. We will inform you by email when you are switching over, allowing you to make the necessary changes to your scripts. Please check our HPC changeover notes for more details on what will change.

HPC job submission guidelines

The following gives more information on how to submit jobs to LSF, including some advanced usage guides.

Interactive jobs

To submit an interactive job to the inter queue:

bsub -I -q inter <myjob>

The -I, -Is and -Ip options to bsub all request an interactive job; -Ip and -Is additionally create a pseudo-terminal, and -Is enables shell mode support.

Batch jobs

Usage:

bsub -q <queue> -o </path/jobout.log> <myjob>

This submits a job to the given queue; the -o flag writes the job output to the named log file. Logging job output with the -o option is recommended for batch jobs.

Examples of resource requirement strings

| Job requirement(s) | -R option syntax |
|---|---|
| Reserve 10 GB of memory for my job | bsub -R "rusage[mem=10000]" <myjob> |
| Reserve 10 GB of memory for my job AND run on a single host | bsub -R "rusage[mem=10000] span[hosts=1]" <myjob> |
| Nodes sorted by CPU and memory, with 10 GB of memory reserved | bsub -R "order[cpu:mem] rusage[mem=10000]" <myjob> |
| Nodes ordered by CPU utilisation (lightly loaded first) | bsub -R "order[ut]" <myjob> |
| Multi-core jobs (e.g. four CPU cores on a single host) | bsub -n 4 -R "span[hosts=1]" <myjob> |

Multicore jobs

Sometimes you need to control how the selected processors for a parallel job are distributed across the hosts in the cluster.

You can control this at the job level or at the queue level. The queue specification is ignored if your job specifies its own locality.

By default, LSF allocates the required processors for the job from the available set of processors.

A parallel job may span multiple hosts, with a specifiable number of processes allocated to each host. A job may be scheduled on to a single multiprocessor host to take advantage of its efficient shared memory, or spread out on to multiple hosts to take advantage of their aggregate memory and swap space. The span string supports the following syntax:

span[hosts=1] indicates that all the processors allocated to this job must be on the same host. Available nodes have a maximum of 12 slots each, so do not use a value larger than 12 for the -n flag when specifying this option.

e.g.

This will allocate 12 cores on a single machine

This will allocate 36 cores spread across three nodes with xx amount of memory reserved for the job.
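The two cases just described might be submitted as in the sketch below. It only builds and prints the commands rather than submitting them. `myjob` and the `mem_mb` value are placeholders (the memory figure is left as xx above), and `span[ptile=12]` is assumed here as the way to place 12 cores on each of three hosts.

```shell
#!/usr/bin/env bash
# Sketch only: builds and prints the bsub commands; nothing is submitted.
# "myjob" and mem_mb are placeholders, not values from this cluster's configuration.

mem_mb=10000   # assumed memory reservation, in the same units as the table above

# 12 cores, all on a single host.
single_host="bsub -n 12 -R \"span[hosts=1]\" myjob"

# 36 cores at 12 per host (three hosts), with memory reserved via rusage.
three_hosts="bsub -n 36 -R \"span[ptile=12] rusage[mem=${mem_mb}]\" myjob"

echo "$single_host"
echo "$three_hosts"
```

Copy the printed command (with your own job and memory value) to the prompt to actually submit it.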

Submitting jobs with specific resource requirements (-R) / Submitting jobs with scratch dependencies

To submit a batch job that requires an execution node with a 2 TB local disk (/scratch), add the -R dsk option to your bsub command. This targets the specific nodes in the grid that meet the requirement.

e.g.

OR,

To list all nodes with a 2 TB local disk (/scratch) available, run either the lshosts or the bhosts command.

e.g

OR, e.g

Job dependencies

Sometimes, whether a job should start depends on the result of another job. To submit a job that depends on another job:

- Use the -w option to bsub (it is a lowercase w)

- Select a dependency expression

- Select a dependency condition

Example 1:

Example 2:

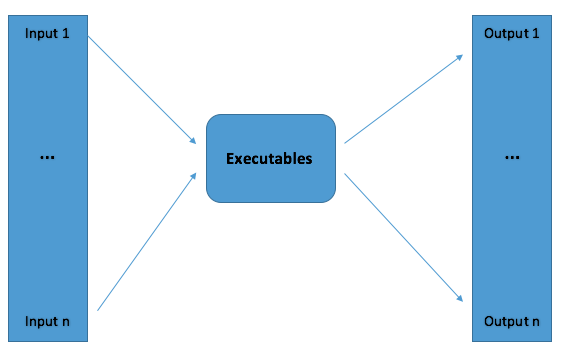
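As a rough illustration of the -w option, the sketch below chains three jobs. It only prints the commands; the job names and scripts (prepare, analyse, cleanup) are hypothetical. It assumes the standard LSF dependency conditions done(...), which waits for successful completion, and ended(...), which waits for the job to finish whether it succeeded or failed.

```shell
#!/usr/bin/env bash
# Sketch only: prints the bsub commands; nothing is submitted.
# Job names and scripts (prepare, analyse, cleanup) are hypothetical.

# First job, named with -J so that later jobs can refer to it.
step1='bsub -J prepare -o prepare.log ./prepare.sh'

# Starts only after "prepare" has finished successfully (DONE state).
step2='bsub -J analyse -w "done(prepare)" -o analyse.log ./analyse.sh'

# Starts once "analyse" has finished, whether it succeeded or failed.
step3='bsub -J cleanup -w "ended(analyse)" -o cleanup.log ./cleanup.sh'

printf '%s\n' "$step1" "$step2" "$step3"
```

A numeric job ID can be used inside the condition instead of a job name.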

Job arrays

A job array is an LSF structure that allows a sequence of jobs to:

- Share the same executable

- Have different input files and output files

In other words, a job array allows mass submission of virtually identical jobs:

- Only one submission request is counted.

- Element jobs are counted for:

    - jobs dispatched

    - jobs completed

Syntax:

bsub -J "ArrayName[index]" -i in.%I myjob

Where:

- -J "ArrayName[…]" names and creates the job array

- index can be start[-end[:step]]

- -i in.%I is the input file to be used, where %I is the index value of the array

- myjob is the application to be executed

e.g.

To create 1000 jobs, each running the myJob script:

bsub -J "myArray[1-1000]" -i "in.%I" myJob

To create 1000 jobs, each running the myJob script, while limiting the maximum number of jobs running in parallel:

bsub -J "myArray[1-1000]%20" -i "in.%I" myJob

1000 jobs will be submitted to LSF, but LSF will run only 20 jobs at a time. Job throttling is recommended when submitting a large number of jobs, so that other cluster users can share the resources at the same time (being nice!).

To create 1000 jobs, each running the myJob script and each writing its own output file:

bsub -J "myArray[1-1000]%20" -i "in.%I" -o "myJob.%J.%I" myJob

Same as above, but this time each job creates the output file myJob.<jobid>.<jobindex>.
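Inside the job script itself, the element index is also available as the environment variable LSB_JOBINDEX, which LSF sets for each array element. The sketch below shows one way a script might use it to pick its own files; the file name patterns are hypothetical, and the fallback value of 1 is only there so the script can be tried outside LSF.

```shell
#!/usr/bin/env bash
# Sketch of a job script run as one element of a job array.
# LSB_JOBINDEX is set by LSF for each element; the fallback of 1
# only exists so the script can be tried outside LSF.
index="${LSB_JOBINDEX:-1}"

# Hypothetical per-element file names derived from the index.
input="in.${index}"
output="out.${index}"

echo "element ${index}: input=${input} output=${output}"
```

This is an alternative to passing the input with -i "in.%I" on the bsub command line, and can be handy when a job needs several index-dependent files.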

More useful job array examples can be found here:

https://scicomp.ethz.ch/wiki/Job_arrays

Job groups

A job group is a container for jobs or job arrays that lets you control a group of jobs rather than individual jobs, which makes managing sets of jobs easier. For example, an application may have one group of jobs that processes data weekly and another job group for monthly processing. You can submit, view, and control jobs according to their groups rather than looking at individual jobs. Job groups can be set up and defined prior to or at job submission.

Setting up a job group prior to job submission:

Set LSB_DEFAULT_JOBGROUP, which makes all your submitted jobs default to this job group:

export LSB_DEFAULT_JOBGROUP=<my_jobgroup_name>

Currently, job groups must be created by an HPC admin, so you need to submit a ticket with the requirement details to have one created.

Looking at job groups

bjgroup -s

To attach a job group to your job, pass -g <my_jobgroup_name> to bsub.

e.g.

bgadd -L 10 /NSv4

This will create a job group /NSv4 which limits the number of concurrently running jobs to 10. You can submit as many jobs as you want, but LSF will run only 10 at a time.

To submit a job using the above job group:

bsub -q medium -g /NSv4 -o job.log myjob

You can modify a job group using bgmod, e.g. to update the job limit of job group /NSv4:

bgmod -L 2 /NSv4

Application profile

An LSF application profile defines common parameters for jobs and workflows of the same type or with similar resource requirements, including the execution requirements of the applications, the resources they require, and how they should be run and managed.

It gives you a simpler way to submit jobs without having to spell out all of the job's resource requirements. For instance, to submit a job (for the sake of argument, let's call the workflow nsv4) that requires 44 CPU cores, 50 GB of memory and 2 TB of /scratch, you would normally submit it like this:

bsub -q medium -n 44 -R "span[ptile=12] rusage[mem=50000]" -o /path/to/jobout <myjob>

With an application profile, the job requirements are predefined in the LSF configuration, so the submission becomes:

bsub -q medium -app <application name> -o /path/to/jobout <myjob>

Run bapp to view a particular application profile or all profiles defined in the cluster. To see the complete configuration of each application profile, run bapp -l. If you require an application profile to be created for your job, please raise a ticket with the:

- preferred name of the profile

- complete list of your job's resource requirements (viz. number of cores, approximate memory, expected runtime, local disk requirement, etc.)

Throttling jobs

If you are submitting large quantities of jobs and/or jobs with long run times (typically jobs that run for hours or days), please be mindful of other users of the cluster. We strongly advise you to throttle these jobs.

Throttling means that you can submit all your jobs at once but control how many of them are running concurrently. LSF allows this in a number of ways:

- Control the number of running jobs via job groups

To enable job throttling via job groups, the job groups need to be created first. Please raise an INFRA ticket with the information needed to create the job group, viz. job group name, who can access the job group, and the number of running jobs.

e.g. Here is an example of submitting jobs using a job group named myjobgroup with a running job limit of 50. You can submit as many jobs as you wish, but LSF will restrict them to 50 running at one time, then the next 50, and so on.

bsub -q medium -g /bio/myjobgroup <rest of the submission>

- Control the number of running jobs via a job array

e.g. To submit an array of 1000 jobs and throttle it to 50 running at once:

bsub -q medium -J "myArray[1-1000]%50" <rest of the submission>

- Control the number of running jobs via policies/advance reservation/application profile

To enable this, you will need to raise an INFRA ticket with the information needed to create the policy, viz. policy/application profile name, who can access the policy, number of running jobs, etc. Submission will then be:

via application profile

bsub -q medium -app myapp <rest of the submission>

via reservation ID

bsub -q medium -U <Reservation ID> <rest of the submission>

Using scripts to submit your job

To avoid long commands, the arguments explained above can be combined in a bash script, which can then be submitted with just:

bsub < myscript.sh

Note the < sign above, indicating that your script is fed into the submission command.

The idea of myscript.sh is that the arguments are now given in the header, on lines starting with #BSUB, for example:
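A minimal sketch of such a script follows. The job name, queue, core count, memory value and log file name are placeholders taken from the examples earlier on this page, not recommended settings. Because the #BSUB lines are ordinary comments to bash, the script also runs unchanged outside LSF.

```shell
#!/bin/bash
# Hypothetical job script (myscript.sh); submit with: bsub < myscript.sh
# Lines starting with #BSUB are read by bsub as submission options.
# Queue, core count, memory and file names below are placeholders.

# Job name
#BSUB -J myjob
# Queue to submit to
#BSUB -q medium
# Four cores on a single host, with 10 GB of memory reserved
#BSUB -n 4
#BSUB -R "span[hosts=1] rusage[mem=10000]"
# Log file for the job output; %J is replaced by the job ID
#BSUB -o myjob.%J.log

# The actual work of the job starts here.
msg="Job running on $(uname -n)"
echo "$msg"
```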

To find out what each of these options means, read the documentation above and the introduction here. Note that you can add and remove arguments as needed.